Always happy to see more work extending DiLoCo and reducing the bandwidth requirements of pre-training!

August 22, 2025

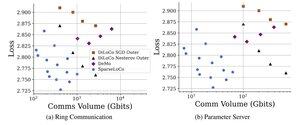

Introducing SparseLoCo: a communication-efficient method for LLM pre-training.

TL;DR: We leverage Top-k sparsification + error feedback with DiLoCo’s infrequent outer steps, communicating only 1–3% of gradients with 2-bit quantization, and outperform DiLoCo and DeMo. 1/N
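For readers new to the terms in the TL;DR, here is a rough sketch of what "Top-k sparsification + error feedback" with 2-bit quantization can look like for a single worker. It is not the authors' implementation: the function names, the uniform 4-level quantizer, and the choice to fold quantization error into the residual are all assumptions made for illustration, and DiLoCo's outer optimizer step is not modeled.

```python
def quantize_2bit(value, scale):
    """Map a value in [-scale, scale] to a code in {0, 1, 2, 3} (assumed uniform grid)."""
    if scale == 0.0:
        return 0
    step = 2.0 * scale / 3.0          # 4 levels means 3 intervals
    code = round((value + scale) / step)
    return max(0, min(3, code))


def dequantize_2bit(code, scale):
    """Invert quantize_2bit: return the grid level that the code stands for."""
    return -scale + code * (2.0 * scale / 3.0)


def compress(update, residual, k):
    """Hypothetical helper: pick the top-k entries, quantize them, keep the rest as error.

    Returns (indices, codes, scale, new_residual). Only indices, codes, and
    scale would need to be communicated.
    """
    # Error feedback: add back whatever earlier rounds failed to send.
    acc = [u + r for u, r in zip(update, residual)]

    # Top-k sparsification: keep the k largest-magnitude entries.
    by_magnitude = sorted(range(len(acc)), key=lambda i: abs(acc[i]), reverse=True)
    indices = sorted(by_magnitude[:k])

    values = [acc[i] for i in indices]
    scale = max((abs(v) for v in values), default=0.0)
    codes = [quantize_2bit(v, scale) for v in values]

    # The new residual is everything the receiver will NOT reconstruct:
    # unsent entries in full, plus the quantization error of the sent ones.
    new_residual = list(acc)
    for i, c in zip(indices, codes):
        new_residual[i] = acc[i] - dequantize_2bit(c, scale)
    return indices, codes, scale, new_residual


def decompress(indices, codes, scale, size):
    """Rebuild the dense (mostly zero) vector on the receiving side."""
    dense = [0.0] * size
    for i, c in zip(indices, codes):
        dense[i] = dequantize_2bit(c, scale)
    return dense
```

Calling `compress` once per outer step and feeding `new_residual` back in as `residual` next time means dropped or rounded-off values are delayed rather than lost, which is the point of error feedback. With `k` set to 1–3% of the vector length, only that fraction of entries is sent, each as a 2-bit code plus its index.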

ArXiv:

GitHub:
